Originally posted on SogetiLabs:

https://labs.sogeti.com/gitops-primer/

With the rise of cloud, cloud-native and a lot of other buzzwords, we also see the rise of all kinds of Dev(fill here)Ops practices. While I’m an avid advocate of the DevOps mindset, all the buzz around pipelines sounds old-school to me.

What is GitOps?

GitOps evolved from DevOps. The specific state of deployment configuration is version-controlled (using Git for example). Changes to configuration can be managed using code review practices, and can be rolled back using version-controlling.

https://en.wikipedia.org/wiki/DevOps#GitOps

A quick look at Wikipedia brings you the above description, tucked away somewhere in the DevOps article. As with DevOps, there is a whole religion around which tool is the best to use, and people even claim to be ‘DevOps Engineers’, whatever that may be. In most cases they mean ‘cloud infrastructure’, because DevOps is a mindset and not a function.

It doesn’t help that some tools, like Azure DevOps, actually carry the name, but hey, VSTS was not really a snappy name either IMHO.

To explain GitOps in my own words and in a single sentence: instead of you pushing your code and deploying it, your system pulls the software from the version control system.

A nice one-liner by WeaveWorks is: GitOps is Continuous Delivery meets Cloud Native

https://www.weave.works/technologies/gitops/

GitOps versus DevOps Pipelines

So it is the same as DevOps pipelines, right? No, it is not. From a distance the two can look the same: with CI/CD, your code will also be deployed automatically if everything is configured correctly.

The fundamental difference is that your system (for example Kubernetes) and not your DevOps CICD pipeline, is in the lead. Remember, the settings of the k8s system itself also need a repository.

Let’s look at a typical CICD setup:

As you can see in the example above, the Azure DevOps pipelines are in the lead. The Build step pulls in the code, the Release step creates the artifact and pushes it towards a registry, and also installs it on AKS. But this does not include the state of your cluster.

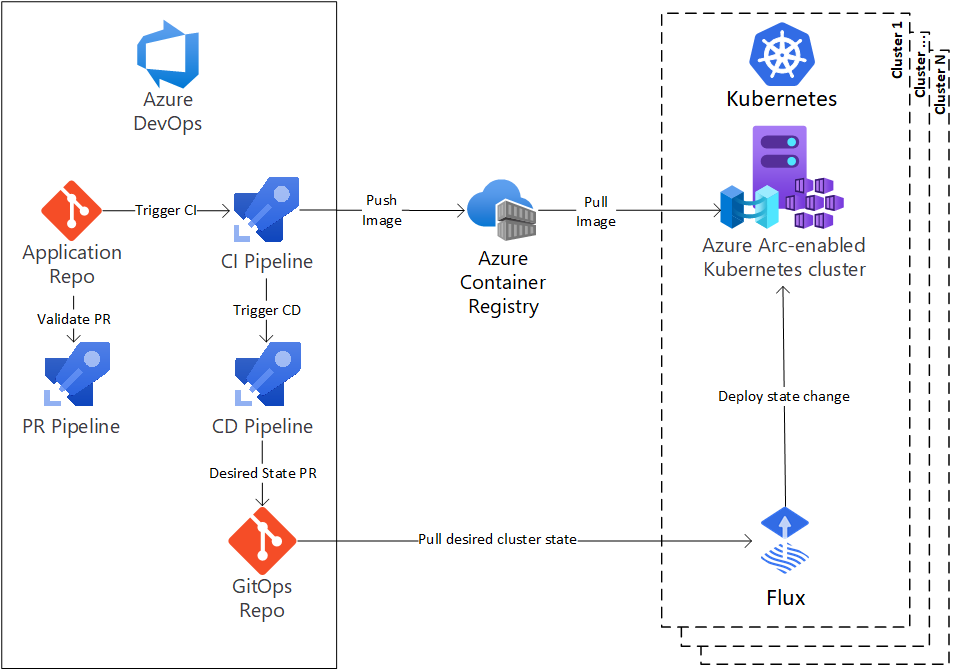

Let’s say your DevOps pipeline trigger failed, or someone made changes manually to the AKS applications or configuration. AKS has no idea where the code is located so it cannot check if there are any changes. This is where GitOps can be a solution. Let’s look at the following example:

In the example above you can see that a tool (in this case Flux) is used to pull the desired state into your Kubernetes cluster. This pulling makes sure that you are always up to date. It can also detect changes: if anything or anyone changes the configuration manually, this ‘drift’ is resolved the next time Flux pulls and applies the desired state!
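To make the idea of drift more concrete, here is a minimal sketch in Python of what comparing desired and actual state could look like. It is not how Flux is implemented; the names `detect_drift` and `reconcile`, and the dictionaries standing in for cluster resources, are assumptions made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified model: a "state" maps a resource name to its settings.
State = Dict[str, dict]


def detect_drift(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return the (action, resource) pairs needed to make actual match desired."""
    changes = []
    for name, spec in desired.items():
        if name not in actual:
            changes.append(("create", name))
        elif actual[name] != spec:
            changes.append(("update", name))
    for name in actual:
        if name not in desired:
            changes.append(("delete", name))
    return changes


def reconcile(desired: State, actual: State) -> State:
    """Return a new state in which every detected drift has been resolved."""
    result = dict(actual)
    for action, name in detect_drift(desired, actual):
        if action == "delete":
            del result[name]
        else:
            result[name] = dict(desired[name])
    return result


# Desired state as it would be described in the repository.
desired = {"web": {"image": "web:1.2", "replicas": 3}}
# Actual state after someone scaled "web" by hand and left a debug pod behind.
actual = {"web": {"image": "web:1.2", "replicas": 5}, "debug-pod": {"image": "busybox"}}

print(detect_drift(desired, actual))
actual = reconcile(desired, actual)
print(actual == desired)
```

A real agent runs this comparison on a schedule against the live cluster, so a manual change only survives until the next pull.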

Yes, this complicates your setup, but you always know that your clusters are exactly how you described them in the desired state. Especially in situations with Kubernetes, deployment and configuration can be really complex, so these tools make sure you are in control of your systems.

Example of GitOps tools

So I need Kubernetes? No. In theory any system can use the GitOps setup, but for Kubernetes a lot of tools and so-called operators are readily available.

In the previous paragraph, Flux is drawn in the picture. There are many more tools out there, but we see convergence on two major tools: Flux and Argo. The resources section at the end of the blog shows more tools and resources if you want to dive into the deep end of GitOps.

There is a nice writeup (sponsored by Red Hat) on deciding between Flux and Argo: ‘GitOps on Kubernetes: Deciding Between Argo CD and Flux’ on The New Stack. Depending on your situation it can fall either way.

OpenGitOps

The emergence of GitOps led to the OpenGitOps project.

OpenGitOps is a set of open-source standards, best practices, and community-focused education to help organizations adopt a structured, standardized approach to implementing GitOps.

https://opengitops.dev/

The principles of GitOps are four-fold:

- Declarative: A system managed by GitOps must have its desired state expressed declaratively.
- Versioned and Immutable: Desired state is stored in a way that enforces immutability, versioning and retains a complete version history.
- Pulled Automatically: Software agents automatically pull the desired state declarations from the source.
- Continuously Reconciled: Software agents continuously observe actual system state and attempt to apply the desired state.
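The four principles can be sketched together in a few lines of Python. This is a toy model under stated assumptions, not part of the OpenGitOps project: `DesiredStateStore` is a hypothetical stand-in for a Git repository, and `Agent` for a tool such as Flux or Argo CD.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)  # frozen: a stored revision cannot be modified afterwards
class Revision:
    revision_id: str
    manifest: str  # declarative description of the desired state, as JSON text


class DesiredStateStore:
    """Hypothetical stand-in for a Git repository: an append-only history."""

    def __init__(self) -> None:
        self._history: List[Revision] = []

    def commit(self, desired: dict) -> Revision:
        manifest = json.dumps(desired, sort_keys=True)
        parent = self._history[-1].revision_id if self._history else ""
        # The id depends on the parent, so every revision pins its full history.
        digest = hashlib.sha256((parent + manifest).encode()).hexdigest()[:12]
        revision = Revision(digest, manifest)
        self._history.append(revision)
        return revision

    def latest(self) -> Optional[Revision]:
        return self._history[-1] if self._history else None

    def history(self) -> List[Revision]:
        return list(self._history)


class Agent:
    """Hypothetical agent: it pulls from the store, nothing pushes to it."""

    def __init__(self, store: DesiredStateStore) -> None:
        self.store = store
        self.applied_revision: Optional[str] = None
        self.actual: dict = {}

    def sync(self) -> bool:
        """Pull the latest revision; return True if the actual state changed."""
        latest = self.store.latest()
        if latest is None:
            return False
        desired = json.loads(latest.manifest)
        changed = self.actual != desired
        self.actual = desired
        self.applied_revision = latest.revision_id
        return changed


store = DesiredStateStore()
agent = Agent(store)
store.commit({"web": {"replicas": 2}})
store.commit({"web": {"replicas": 3}})

print(agent.sync())                    # first pull applies the latest revision
agent.actual["web"]["replicas"] = 10   # someone changes the system by hand
print(agent.sync())                    # the next pull restores the desired state
print(agent.sync())                    # nothing left to reconcile
```

In this model a rollback is simply a new commit that contains an earlier manifest, so the history stays complete.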

“The GitOps Working Group is a WG under the CNCF App Delivery SIG.

The focus of the GitOps WG is to clearly define a vendor-neutral, principle-led meaning of GitOps. This will establish a foundation for interoperability between tools, conformance, and certification. Lasting programs, documents, and code are planned to live within the OpenGitOps project.”

The main goal of the OpenGitOps CNCF Sandbox project is to define a vendor-neutral, principle-led meaning of GitOps. This will establish a foundation for interoperability between tools, conformance, and certification through lasting programs, documents, and code.

Concluding

GitOps has been around for several years and continues to evolve. The CNCF has OpenGitOps as a Sandbox project and has embraced Flux and Argo as Incubating projects. The shift in mindset can be difficult, as some people are still trying to embrace DevOps as a principle.

Watch this space for more information and an upcoming eBook on our take and practices surrounding GitOps!

Resources and further reading

| Resource | Link |
| --- | --- |
| OpenGitOps | https://opengitops.dev/ |
| GitOps eBook | https://www.gitops.tech/ |
| Weaveworks Guide to GitOps | https://www.weave.works/technologies/gitops/ |
| Harness | https://harness.io/blog/devops/what-is-gitops/ |
| Flux | https://fluxcd.io/ |
| Argo CD | https://argo-cd.readthedocs.io/en/stable/ |
| Jenkins X | https://jenkins-x.io/ |
| CNCF | https://www.cncf.io/ |